By Jay Budzik

In recent weeks an incredible amount of attention has focused on generative AI technologies like ChatGPT, and the recently launched GPT-4. However impressive these models are, they are still somewhat rough around the edges. To wit: just ask ChatGPT to explain long division with decimals to a grade schooler. The answer will be incorrect and full of inaccurate examples that would easily confuse a novice trying to learn:

Chat GPT: For example, if we are dividing 10 by 3 using long division, and we get the answers 3, 3, and 1 above the digits, we know that the divisor (3) goes into the dividend (10) three times, with a remainder of 1. So the quotient is 3 + 3 + 0.1 (which is the same as 0.3) = 3.3.

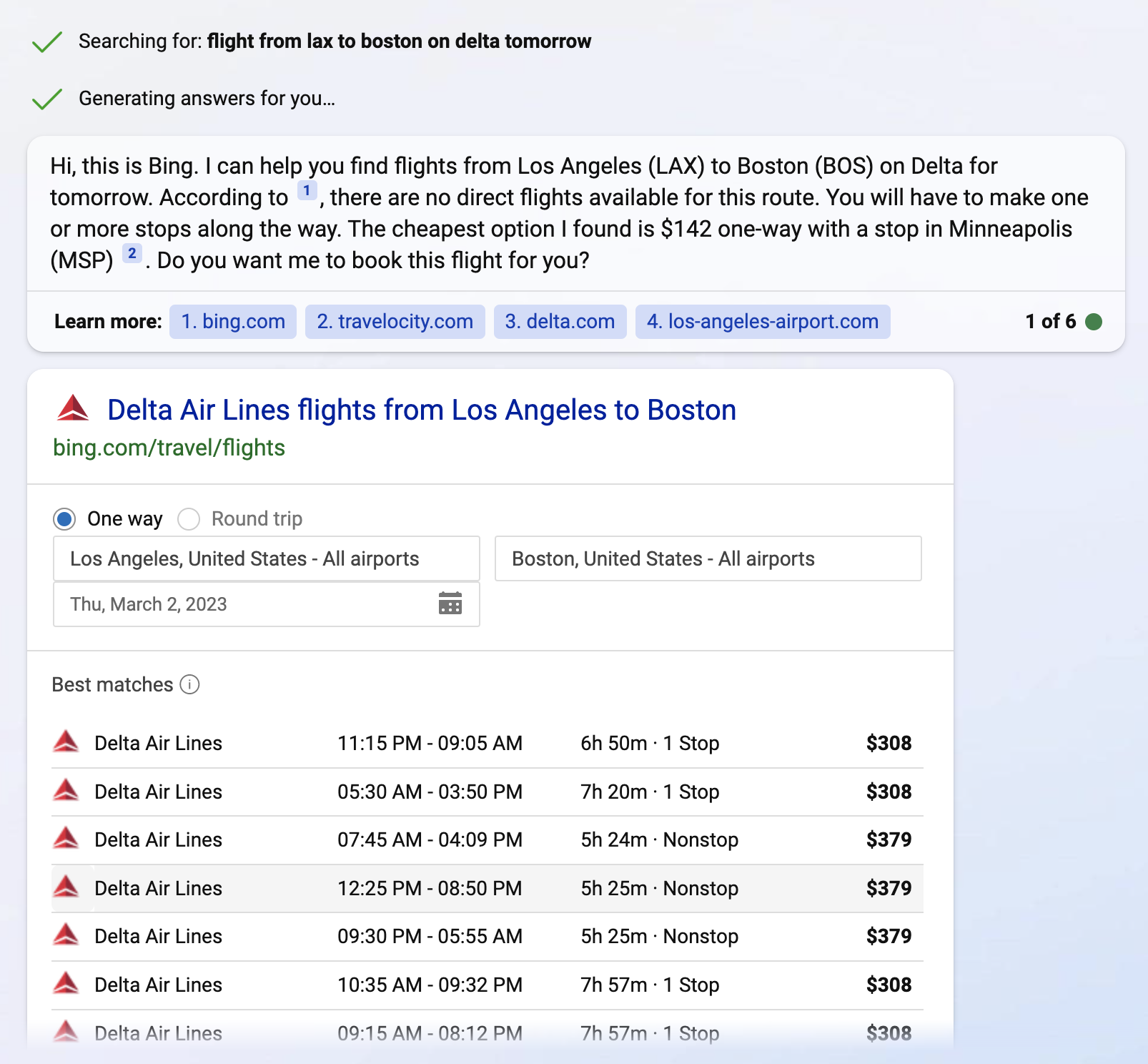

Microsoft poured $10B into the company, and rushed its ChatGPT-powered version of the Bing search engine to market. After initially receiving glowing reviews, it became clear that more testing and refining would be needed for the new AI-powered search engine to live up to the hype. Like the Lenza generative AI that came before it, which struggled to keep the avatars it output PG, Bing’s implementation also had issues that even tech giant Microsoft couldn’t smooth out in time to avoid a somewhat embarrassing series of reports about unsettling interactions with the chatbot. Indeed, ask Bing for help scheduling a flight, and the answer you get might be far from correct:

Modern AI systems are truly remarkable, but it’s easy to get carried away when the system performs well on “happy path” scenarios, and become overconfident it’s ready for prime-time. This kind of optimism is all too familiar to any practitioner who has deployed AI systems in real-world settings.

The right deployment context matters

Even in its current, imperfect form, generative AI can still be very useful. For example, generative AI has been reliably used for years to create more realistic speech from text, to draft marketing copy that can be checked and edited before use, and more recently, to interactively recommend the next line of code which a developer can decide to use or not. Generative AI is being adopted by Hollywood to bring a younger version of Tom Hanks to life in the upcoming film Here which undoubtedly will be edited by dozens of humans to correct any errors that might crop up in the process. Even Lenza put a human in the loop when they allowed users to select which avatar they liked the best. “Human in the loop” is one solution for AI’s rough edges. The other is vertical or domain specific applications.

Consider vertical applications that are more easily verified

Vertical applications of generative AI hold much near-term promise, because the AI need not be an expert in everything, and a narrower use case is naturally easier to test. For example, large businesses have already adopted AI chatbots to reduce customer service costs, and the next generation of these bots will undoubtedly be powered by generative AI, to provide a more satisfying and realistic user experience. Because the questions you can ask a bank’s customer service chatbot or call center are relatively limited in scope, the answers can more easily be verified. And because the subject matter your virtual customer service rep is expected to cover is relatively limited, it’s easier to put in guardrails to prevent embarrassing situations when the user asks questions that are far afield from the AI’s designed purpose. The same holds for sales productivity reports, business financial reporting and summarization, etc.

Any experienced AI practitioner will tell you that the last 20% of quality or accuracy takes 80% of the time and budget (like in any engineering project). For Ai projects though, the key question is 20% of what? If the problem is too big, or too high stakes, that 20% can quickly become unmanageable. We have witnessed this in the self-driving car hype that is taking years longer than originally promised to come to fruition. In high stakes scenarios, e.g., when human lives are at stake, or big financial risks are being made, it’s really important the system be thoroughly tested. Yet, more thorough testing typically generates more issues that need to be fixed, resulting in delays, which drive up costs. Of course, fixing the self-driving car so that it doesn’t suddenly brake in the middle of an intersection for no apparent reason is necessary, but the steady stream of unanticipated problems like these can lead to a money pit of engineering cost, especially when the tooling required to debug and correct AI models is still relatively underdeveloped.

While ambitious, high-stakes, general-purpose AI problems like these are worth solving, and much progress has been made, a less expensive and less risky path is to focus on better constrained, vertical applications that can be enriched with domain knowledge, and for which a more comprehensive suite of test cases can be more easily produced, even when the stakes are high. This is the approach I took at Zest AI, where we built AI systems that automatically generate credit underwriting models that move 100s of billions USD. Because the credit underwriting problem domain is relatively constrained, it was possible to enrich our automation tools with domain semantics. This domain knowledge complemented algorithmic learning algorithms to ensure the models our automated pipelines produced learned what we wanted them to learn, and could be made transparent to human reviewers so they could be validated.

Apply a risk management framework to your AI investment decisions

The more constrained the problem, the easier it is to get the AI to work, and to verify that it is indeed working. The less constrained the problem is, the more investment will be required to gather data, design the algorithms, train and retrain the AI, and all that effort drives up data science headcount and timelines. Moreover, testing costs balloon with less constrained problems, because a human has to verify the outcome is correct, and in an unconstrained problem domain, even just a 1% sample of potential outcomes is significant (after all, infinity times a constant is still infinity). When stakes are high, and the cost of a bad outcome is high, these costs compound. Add in regulation that requires independent validation and verification, and the costs can get very large very quickly.

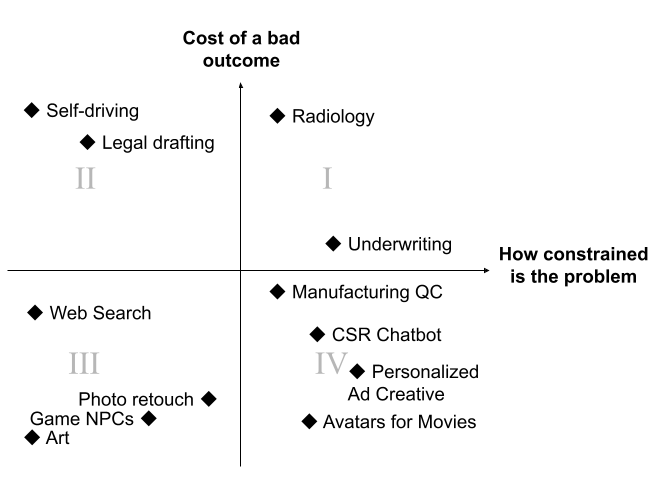

The risk framework below can help assess AI-driven use cases:

Most successful AI applications hang out in quadrant IV, where the stakes are low and the problems are well-constrained. The board game checkers, for example, which has no-cost bad outcomes, and is highly constrained, was solved by Arthur Samuel in 1959. AI has tackled successively harder problems ever since, and there is still plenty of value to be created solving constrained, low-stakes problems, where each individual AI-driven output doesn’t matter that much, but the aggregate outcome can be improved as a result of incremental accuracy. For example, when you upgrade from basic rules-based or linear modeling methods to machine learning models, it can generate significant improvements in business outcomes. This has led to successful applications of AI in progressively higher stakes problems like underwriting and computer-assisted radiology, where AI can make more accurate predictions on its own than a human could (as in the case of automated underwriting) and in high stakes decision support scenarios where there is a human in the loop (as in computer-assisted radiology).

In recent years, practitioners have started to focus on less constrained problems, like the kinds of applications we see in quadrants II and III. Novel deep learning, attention mechanisms and adversarial training methods allow for the construction of generative AI models that produce human-like outputs. When getting it wrong has insignificant consequences, generative AI products have already been brought to market with varying success. For example, generative adversarial networks (GANs) are already built into widely-used products like Google Pixel where they are used to de-blur, de-noise, and correct the lighting in photos you take, and users love the result. If the process doesn’t work, the original photo is still available. But more ambitious applications have mixed results, which is a problem when your reputation is based on the accuracy of your answers. Even though the consequence of one bad search answer is relatively small, factual errors in Google’s Bard announcement led to $100 billion in market cap disappearing overnight.

There is plenty of money to be made where AI can be used to create unique experiences people will pay for or that can be monetized by advertising, even when the stakes are relatively low. For example, we are sure to see a slew of video games announce they have LLM-enabled NPCs in the coming months, and there will be an ecosystem of tools developed to make adding avatars and bots to various low-stakes experiences easier. I am sure that people will be delighted by these developments, and over time, the solutions may migrate up to Quadrant II.

In Quadrant II, the stakes are high and the problem is unconstrained. This quadrant is the hardest, the highest risk, and the most expensive. Model complexity goes up dramatically. There are networks of models that all need to work together to create the desired outcome. Debugging one model is hard but when you have a complex system of models, things become even harder. For example, consider applying LLMs to a high-stakes task like summarizing medical test results for patients, or drafting legal agreements. For certain types of test results and certain types of legal forms, I bet an out of the box LLM would be pretty good. But when it makes an error, how do you correct it and make sure it is corrected? As a novice, how do you know whether the answer the AI gave is correct or is an error? Hard to say, and I cheated – I moved the problem from Quadrant II to Quadrant I. Until we build up enough confidence and trust in models, there will be a human in the loop reviewing the output, and many AI solutions will be “suck” assisting humans, versus replacing them. That’s not all bad. AI systems in a decision support role, generating first drafts, or in other “human in the loop” scenarios, can still improve efficiency and drive down costs.

Because of the high cost and uncertain timelines, we will likely mostly see tech giants with deep pockets attempt applications in Quadrant II for now. But what will it take to see these high value use cases come to fruition? I will tackle this question in my next post.

While we have seen much progress in generative AI, we still have work to do. General purpose generative AI isn’t ready for prime time yet, but with the right risk management framework, you can pick applications that avoid runaway expenses and are more likely to succeed.

What will it take for general purpose AI to impact high stakes problems?

So far we’ve outlined a risk management framework for AI and encouraged a focus on vertical, constrained applications. This is because general-purpose AI is very hard: humans are very good at qualitative reasoning, “common sense”, and emotional intelligence; computers simply are not. Humans have a notion of what is socially appropriate, about how confident they are in their answers, and they can explain where they learned what they learned and why they think they are right. Most AI can’t do any of this yet. Even just controlling what an AI learns from data is very difficult, but not impossible.

More Progress is Needed on AI Fundamentals and Better Tooling

To make general purpose generative AI a reality for all of the use cases we can dream up for it, without humans in the loop, we need three fundamental technology leaps and some good engineering:

- Introspection, the ability for the AI to understand why it makes decisions and explain them to humans

- The ability to incorporate and enforce logical and qualitative constraints on models

- The ability to better influence what models learn

- Better tooling for simulation and model verification

The good news is, progress is being made in all of these areas, and it’s only a matter of time before effective techniques move out of the lab and make it into industrial applications. These problems create opportunities for companies seeking to solve challenges that will allow next generation AI solutions to flourish.

AI Introspection

Understanding what a model has learned is a fundamental challenge. Model explainability methods based on the mathematics of game theory (e.g., SHAP and Integrated Gradients) have opened up “black box” models like XGBoost and Deep Neural Networks. In the context of tabular data, where each data attribute has known semantics, these techniques are already finding useful applications in industry. But when applied to LLMs and convolutional nets, the results are still difficult to interpret. More progress is needed on LLM and vision model explainability, to summarize the internal representations deep nets have inside them so they can be more easily reviewed in advance of deployment, and in the context of generative models, to explain why a particular output was generated.

While a model may have learned the right thing, getting a vision model to summarize what it learned in a way humans can easily understand remains a significant challenge. Moreover, understanding how data impacts what a model learns is still an open area of investigation. Further research on influence functions is needed to summarize how model outputs relate to training data instances, so models can be more easily corrected by revising the training data. Likewise, more progress is needed to quantify the extent to which a model is confident in its answer, and that it has adequate support in the data for the predictions or outputs it’s generating.

User: You seem pretty confident about that

ChatGPT: Yes, I am confident in my explanation of the long division process for dividing decimals. Please let me know if you have any further questions or if there’s anything else I can help you with!

User: How can you be sure?

Chat GPT: As an AI language model, my responses are generated based on patterns and rules derived from analyzing a large amount of text data. I am designed to provide accurate and consistent information based on the rules and knowledge that I have been trained on.

When a model prediction or output comes with a real (not canned) estimate of confidence, and the reason a model generates an output can really be interrogated, the user can more easily develop an opinion about whether they should trust the answer.

Better model introspection tools will allow the systems these models are embedded in to migrate from low-stakes to higher stakes applications, and allow model developers to more easily correct mistakes made along the way. Solving these problems may also allow the next generation of ML ops companies move beyond the relatively mundane (yet still important) artifact management, experiment tracking, deployment, inference infrastructure use cases.

Incorporating causal and qualitative constraints

While LLMs and GANs can generate some pretty incredible outputs, to operate safely without a human in the loop, model developers need to be able to enforce constraints that ensure they won’t go off the rails. Additional work is needed to add complementary semantic and qualitative reasoning models to statistical learning algorithms in order to prevent bad things from happening. The ability to add simple constraints to ML models is widely available today. For example, directional constraints that control how an input should change a model’s output (known as monotonic constraints) are already commonplace. While this is very useful in the context of tabular data, where the meaning of an input attribute is well-known, and the desired direction of the relationship to the output is also known (e.g., higher prices lead to lower take rates), when we consider more complex models and constraints, things get a bit more difficult. For example, consider how to prevent Lenza from generating sexualized images, or how to ensure ChatGPT gets its arithmetic right. This is not as straightforward as it may seem, and incorporating such qualitative and quantitative models into a statistical learning process is an area of active research that is already showing promise. Tools that allow model developers to easily incorporate such constraints into the model training process will become increasingly important.

Influencing what a model learns

Machine learning practitioners are all too familiar with the age old adage “garbage in, garbage out.” The quality and quantity of training data can have a dramatic impact on model performance, whether the model is used for classification, regression or generation. However, available training data often reflects human bias that needs to be corrected, or simply distributional anomalies due to the particular sensors used to gather the data that won’t generalize to the real world. Influencing what a model learns from data is difficult but not impossible, as a model’s objective function can be modified to incorporate feedback from a critic or adversary. At Zest, we successfully applied adversarial training to generate models that achieved fairer outcomes for women and people of color, while sacrificing very little, if any, predictive accuracy. Other approaches include re-weighting input data, and filtering or correcting model outputs with other models.

Techniques like these can be applied to generative models to ensure the generated outputs meet a desired qualitative bar, and don’t get called “hyper-sexual” “creepy” or “unhinged.” This creates an opportunity for infrastructure companies that allow model developers to more easily incorporate feedback from secondary models to make generative AI applications safer and easier to correct, or companies that provide datasets or canned models that generative AI model developers can use to filter outputs.

Next-generation tooling for reinforcement learning and model validation

To make more rapid progress on AI for high-stakes and less well-constrained problems, we need better tooling to ensure the AI will do what we want it to do. Feedback mechanisms are important not only for verification but also for generating new data that can be used to train the next iterations of models. Simulation allows reinforcement learning systems to explore potential outcomes and receive feedback that enables models to more rapidly learn, but all RL frameworks require a simulator and an outcome measurement. Creating these today is expensive and hard, which creates another opportunity for solutions.

Likewise, without tooling to validate models, it’s difficult to be confident they will perform as expected. This is compounded when you consider generative AI applications in open-ended problem domains. Tools for managing the testing process, generating test cases, tracking regressions and enabling rapid large-scale evaluation of new model iterations will reduce cycle times and speed the generative AI review process, enabling more LLMs and latent diffusion models to make it out of the lab and into production.

Conclusion

While general purpose generative AI isn’t ready for applications that are open-ended, high stakes, and without a human in the loop yet, with further investments in fundamental technologies and tools to help manage AI risks, we will begin to see more and more AI-powered applications have commercial impact.

0 Comments